Source Code

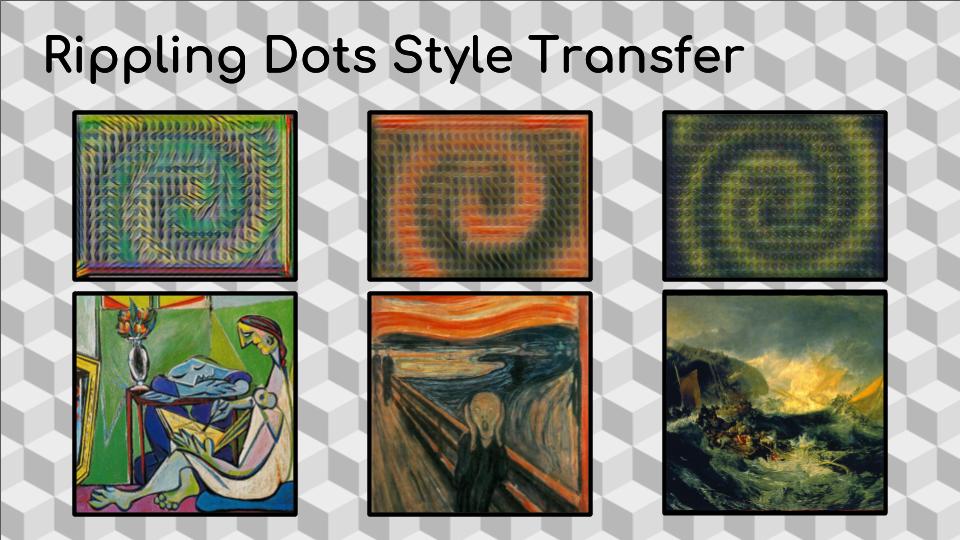

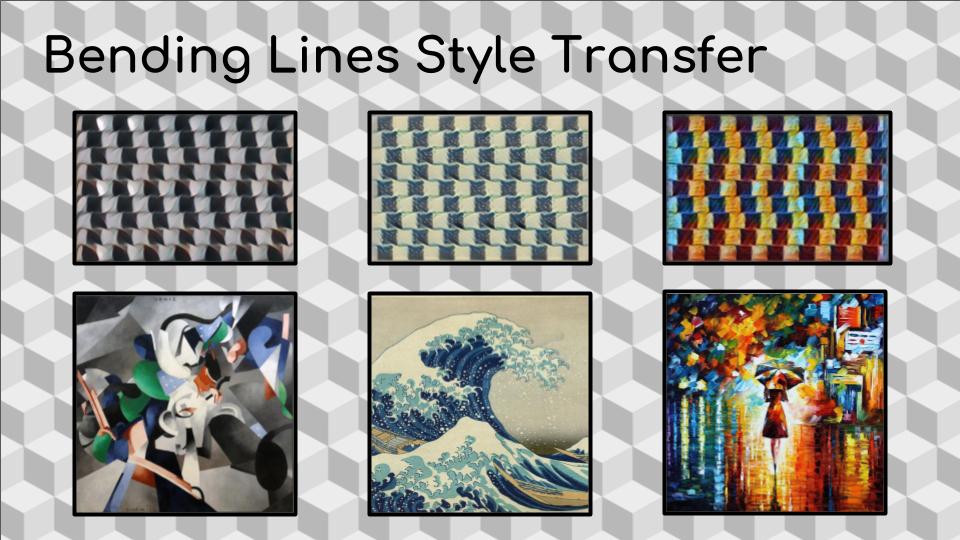

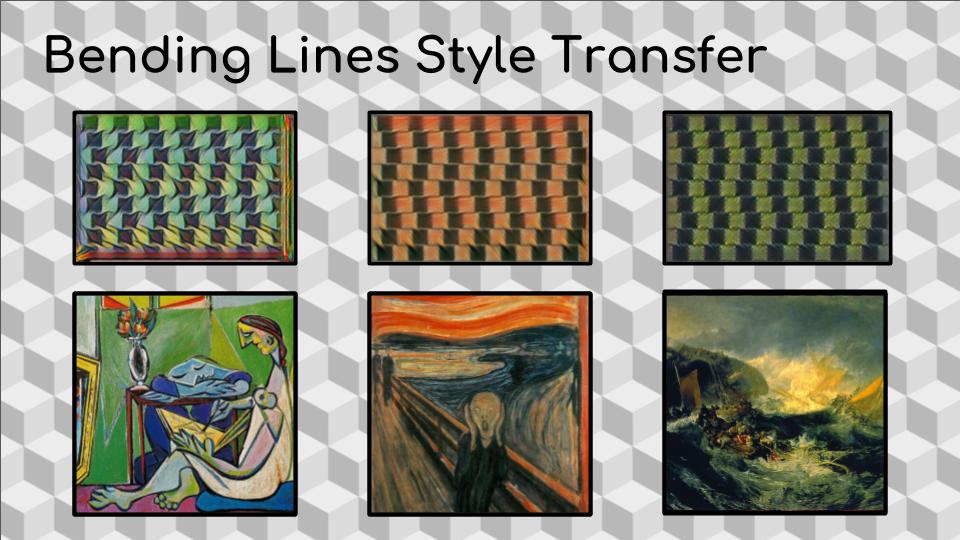

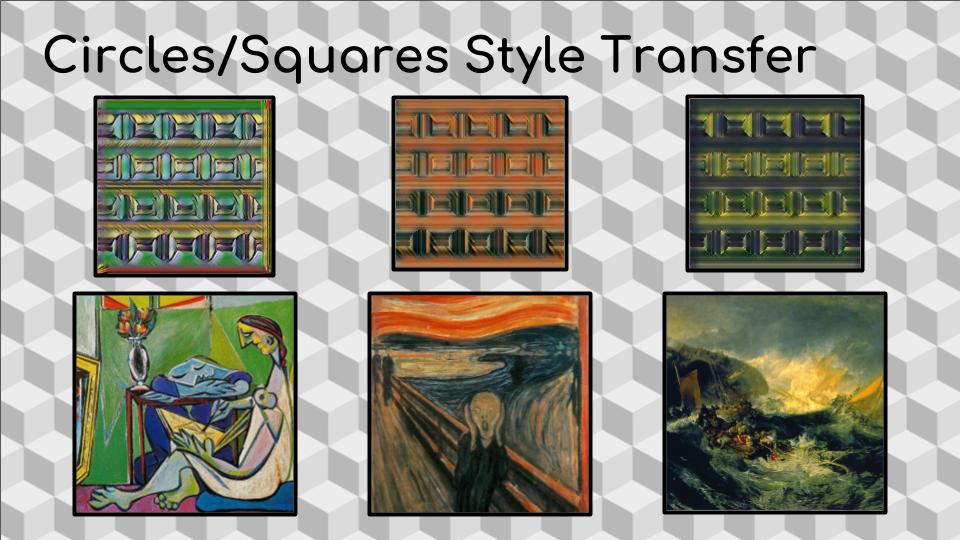

The code linked in the description uses a convolutional neural network that, unlike the traditional optimization-based style transfer method presented in Gatys et al., stylizes images hundreds of times faster, because each image needs only one forward pass through a trained network. The network also uses instance normalization (whose implementation is further explained in this paper). The code uses a loss function "close to the one described in Gatys ... and typically using 'shallower' layers" (they used relu1_1 rather than relu1_2). The faster run times and the empirically "larger scale style features in transformations" made this code an ideal starting point for quickly investigating how optical illusions react to style transfer.
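To make the two ingredients above concrete, here is a minimal NumPy sketch of instance normalization and a Gram-matrix style loss. It is not the linked repository's code. Assumptions: activations are in channels-last `(N, H, W, C)` layout, the layer features (for example from VGG relu1_1) are already computed, and the Gram matrix is divided by `H * W * C` (normalization conventions vary between implementations). The names `instance_norm`, `gram_matrix`, and `style_loss` are illustrative.

```python
import numpy as np

def instance_norm(x, gamma=None, beta=None, eps=1e-5):
    """Normalize each channel of each image over its spatial dimensions.

    x has shape (N, H, W, C). Unlike batch normalization, mean and variance
    are computed per image and per channel, never across the batch.
    """
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    y = (x - mean) / np.sqrt(var + eps)
    if gamma is not None:
        y = y * gamma  # learned per-channel scale, shape (C,)
    if beta is not None:
        y = y + beta   # learned per-channel offset, shape (C,)
    return y

def gram_matrix(features):
    """Channel-by-channel correlations of one layer, (H, W, C) -> (C, C)."""
    h, w, c = features.shape
    f = features.reshape(h * w, c)
    return f.T @ f / (h * w * c)  # assumed normalization by layer size

def style_loss(gen_feats, style_feats):
    """Sum of squared Gram-matrix differences over a list of layers."""
    return sum(
        np.sum((gram_matrix(g) - gram_matrix(s)) ** 2)
        for g, s in zip(gen_feats, style_feats)
    )
```

Because the statistics are per image, instance normalization removes each content image's own contrast before stylization; the Gram matrix discards spatial layout and keeps only which feature channels fire together, which is what the style loss compares.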

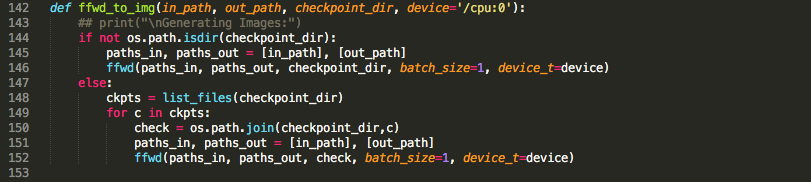

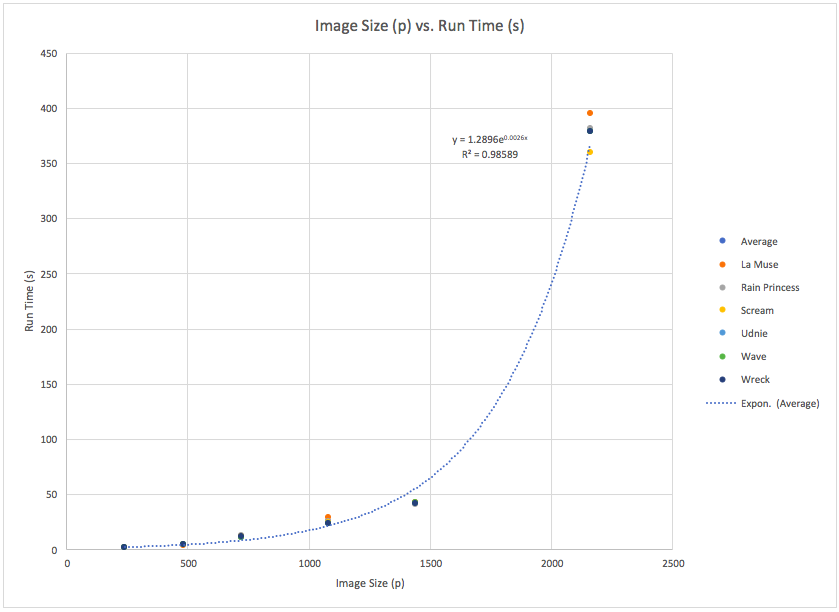

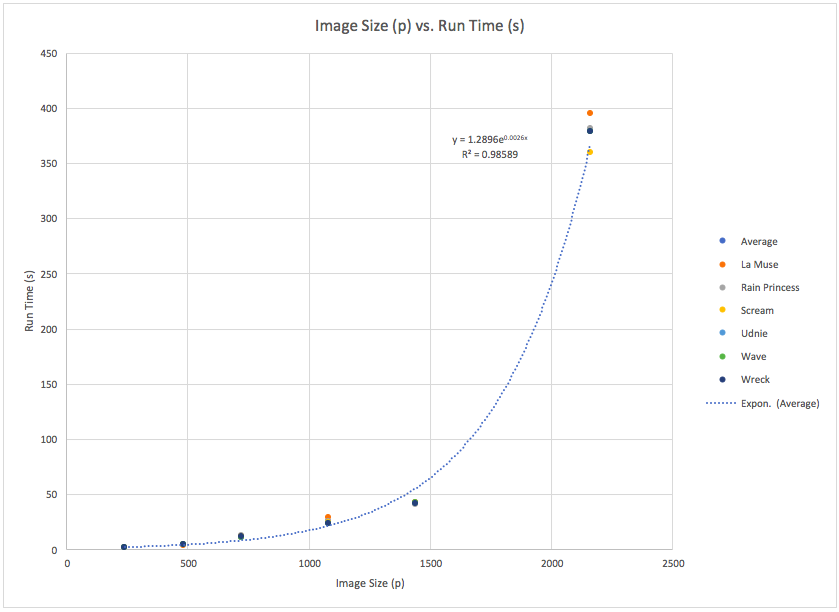

Style Transfer Run Time vs. Image Size

Fast Style Transfer Code Metrics:

Batch Time: ~21 seconds (depending on transfer image size)

- This graph shows the run times for transferring five images at different resolutions (240p, 480p, 720p, 1080p, 2160p) into the "wave" style. The original picture was resized in Microsoft Paint to fit each resolution.

- From 240p to 1080p, run time grows roughly linearly with image size (see the displayed equation and r² value). At 2160p, however, run time spikes: most commercial-grade computers (these runs were on a PC) have to use all available memory to process an image that large. For this reason, our group stuck with content images whose resolutions lie well below the spike in run time that appears after 1080p.
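A timing-and-fit procedure like the one behind this graph can be sketched in plain Python. This is an illustration, not the group's script. Assumptions: "image size" is measured in pixels, each resolution is a 16:9 frame (the original images' aspect ratio is not stated), and the fit is ordinary least squares over the 240p to 1080p points only. The names `time_call`, `linear_fit`, and `RESOLUTIONS` are hypothetical.

```python
import time

def time_call(fn, *args, repeats=3):
    """Best wall-clock time in seconds over several runs of fn(*args)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        best = min(best, time.perf_counter() - start)
    return best

def linear_fit(xs, ys):
    """Least-squares fit y = m*x + b; returns (m, b, r_squared).

    Needs at least two distinct x values and non-constant y values.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    m = sxy / sxx
    b = mean_y - m * mean_x
    ss_res = sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return m, b, 1 - ss_res / ss_tot

# Assumed 16:9 frame sizes (width, height) for each resolution label.
RESOLUTIONS = {
    "240p": (426, 240),
    "480p": (854, 480),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "2160p": (3840, 2160),
}
pixels = {name: w * h for name, (w, h) in RESOLUTIONS.items()}
```

In use, one would pass the stylization call (for example, a hypothetical `stylize(image)` wrapper around the project's evaluation script) to `time_call` once per resolution, fit only the 240p to 1080p points, and compare the 2160p measurement with the line's prediction. Under the 16:9 assumption a 2160p frame has exactly four times the pixels of a 1080p frame (8,294,400 vs. 2,073,600), so the linear trend predicts roughly four times the size-dependent part of the 1080p run time; a measurement far above that is the memory-bound spike described above.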