Our model is based on the model described in “Perceptual Losses for Real-Time Style Transfer and Super-Resolution,” by Johnson et al. Their model is a feed-forward style network that is trained to minimize both content loss and style loss for a specific style image. FIGURE 1, taken from their paper, illustrates how an input image is transformed by a network that is trained to minimize the content loss with respect to the original image and the style loss with respect to a separate style image. For each additional style, a separate network has to be trained from scratch.
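To make the two loss terms concrete, here is a minimal NumPy sketch of a content loss and a Gram-matrix style loss in the spirit of Johnson et al. It is a simplification under stated assumptions: it works on a single feature map of shape (channels, height, width), whereas the paper sums over several VGG layers, and the function names and weights are illustrative rather than taken from either paper's code.

```python
import numpy as np

def gram_matrix(features):
    """Gram matrix of a (channels, height, width) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    # Channel-by-channel correlations, normalized by the number of entries.
    return flat @ flat.T / (c * h * w)

def content_loss(generated_feats, content_feats):
    """Mean squared difference between two feature maps."""
    return float(np.mean((generated_feats - content_feats) ** 2))

def style_loss(generated_feats, style_feats):
    """Squared Frobenius norm of the difference between Gram matrices."""
    diff = gram_matrix(generated_feats) - gram_matrix(style_feats)
    return float(np.sum(diff ** 2))

def perceptual_loss(gen, content, style, content_weight=1.0, style_weight=1.0):
    """Weighted sum of both terms (the weights are illustrative defaults)."""
    return (content_weight * content_loss(gen, content)
            + style_weight * style_loss(gen, style))
```

Because the Gram matrix sums over all spatial positions, the style term ignores where a feature occurs and only measures which features occur together. That is why it captures texture and color statistics rather than layout.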

With our model, we attempted to build a feed-forward network that generalizes over not just the content image but also the style. In other words, our network takes both a content image and a style image and produces a stylized image in a single feed-forward pass. The actual inputs to the network are the style features from a target style image (extracted with the VGG19 network as described in Gatys et al.) and the raw content image passed through an edge-detection filter.
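The text does not say which edge-detection filter was used. As an illustration only, the sketch below assumes a Sobel filter and computes an edge-magnitude map from an RGB image with plain NumPy. The names `filter3x3` and `edge_map` are hypothetical.

```python
import numpy as np

# Sobel kernels (an assumption; the original filter is not specified).
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def filter3x3(image, kernel):
    """Cross-correlate a 2-D image with a 3x3 kernel, using edge padding."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def edge_map(rgb):
    """Return an (H, W) edge-magnitude image scaled to [0, 1]."""
    # Convert (H, W, 3) RGB to grayscale with standard luma weights.
    gray = rgb.astype(float) @ np.array([0.299, 0.587, 0.114])
    gx = filter3x3(gray, SOBEL_X)
    gy = filter3x3(gray, SOBEL_Y)
    mag = np.hypot(gx, gy)
    peak = mag.max()
    return mag / peak if peak > 0 else mag
```

The result is a single-channel image that keeps outlines but discards color and texture, which is the information the style features are meant to supply.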

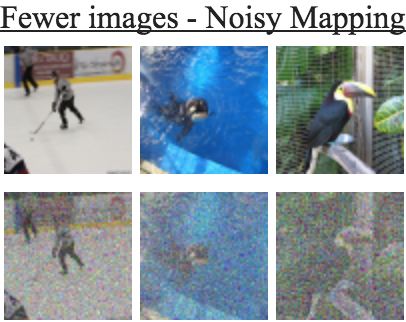

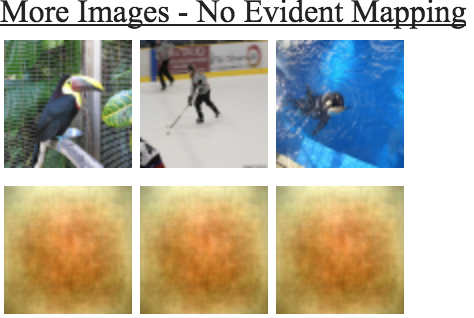

We trained the network to take the style features and the edges of a single image and reconstruct that image. Our hope was that the network would learn to take an edge-only image (FIGURE 2) and impose a style onto it. Once trained, it would then be able to take the edges from one image and the style features from another and combine them in one pass.
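The difference between training and inference in this setup can be sketched as follows. During training, both inputs and the target come from the same image. At inference, the two inputs come from different images. The helper names, the `style_fn` and `edge_fn` callables, and the mean-squared-error loss are assumptions for illustration, since the text does not name the reconstruction loss.

```python
import numpy as np

def training_example(image, style_fn, edge_fn):
    """Inputs and target are all derived from one image."""
    inputs = (style_fn(image), edge_fn(image))
    target = image  # the network should reproduce the original
    return inputs, target

def reconstruction_loss(output, target):
    """Mean squared error (an assumed choice of loss)."""
    return float(np.mean((output - target) ** 2))

def inference_inputs(content_image, style_image, style_fn, edge_fn):
    """At test time, style and edges come from two different images."""
    return style_fn(style_image), edge_fn(content_image)
```

Here `style_fn` stands in for the VGG19 feature extraction and `edge_fn` for the edge-detection filter. The network itself never sees which image each input came from.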

More specifically, we used a convolutional neural network to reduce the dimensionality of the style features generated by the VGG19 network. We then concatenated the resulting vectors with the flattened edge-only image to produce one vector containing both style and edge information. That vector then served as the input to a fully connected neural network, the output of which was one vector of size 64×64×3 = 12,288 (a 64×64 image with 3 color channels).
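The data flow described above can be sketched as a forward pass in NumPy. Only the 64×64×3 output size comes from the text. Everything else is an assumption chosen to keep the example small: the style feature shape, the use of a single 1×1 convolution with average pooling as the reducer, the 64×64 single-channel edge image, the hidden layer width, and the sigmoid output. The weights are random, so this shows shapes and wiring, not a trained model.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def reduce_style(style_feats, conv_w):
    """1x1 convolution + ReLU + 2x2 average pooling, then flatten."""
    mixed = relu(np.einsum("oc,chw->ohw", conv_w, style_feats))
    o, h, w = mixed.shape
    pooled = mixed.reshape(o, h // 2, 2, w // 2, 2).mean(axis=(2, 4))
    return pooled.ravel()

def stylize(style_feats, edges, params):
    """Concatenate reduced style with flattened edges, then apply an MLP."""
    style_vec = reduce_style(style_feats, params["conv_w"])
    x = np.concatenate([style_vec, edges.ravel()])
    hidden = relu(x @ params["w1"] + params["b1"])
    out = sigmoid(hidden @ params["w2"] + params["b2"])
    return out.reshape(64, 64, 3)  # 12,288 values viewed as an RGB image

# Hypothetical sizes, not taken from the original model.
STYLE_SHAPE = (64, 16, 16)   # (channels, height, width) of style features
REDUCED_CHANNELS = 8
HIDDEN = 128
in_dim = REDUCED_CHANNELS * 8 * 8 + 64 * 64  # reduced style + flat edges

params = {
    "conv_w": rng.standard_normal((REDUCED_CHANNELS, STYLE_SHAPE[0])) * 0.1,
    "w1": rng.standard_normal((in_dim, HIDDEN)) * 0.01,
    "b1": np.zeros(HIDDEN),
    "w2": rng.standard_normal((HIDDEN, 64 * 64 * 3)) * 0.01,
    "b2": np.zeros(64 * 64 * 3),
}

image = stylize(rng.standard_normal(STYLE_SHAPE), rng.random((64, 64)), params)
print(image.shape)  # (64, 64, 3)
```

Note that the fully connected output layer dominates the parameter count: with these sizes, `w2` alone holds 128 × 12,288 weights, which is one reason this design is practical only for small output images.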