Model Development

To complete the AI-gym challenge, the player needs to mine three rare minerals in Minecraft. Finding these minerals, however, requires the player to complete many intermediate steps first, and there are many possible routes along the way. To make things even more difficult, the world of Minecraft is full of dangers that would derail a naive approach.

To start thinking about the problem, I drew out the minimum possible sequence of steps to go from spawning to completing the challenge. Assuming no other complications and that the player gathers the necessary resources, the model would have to figure out how to perform and sequence 12 processes, each one dependent on the last.

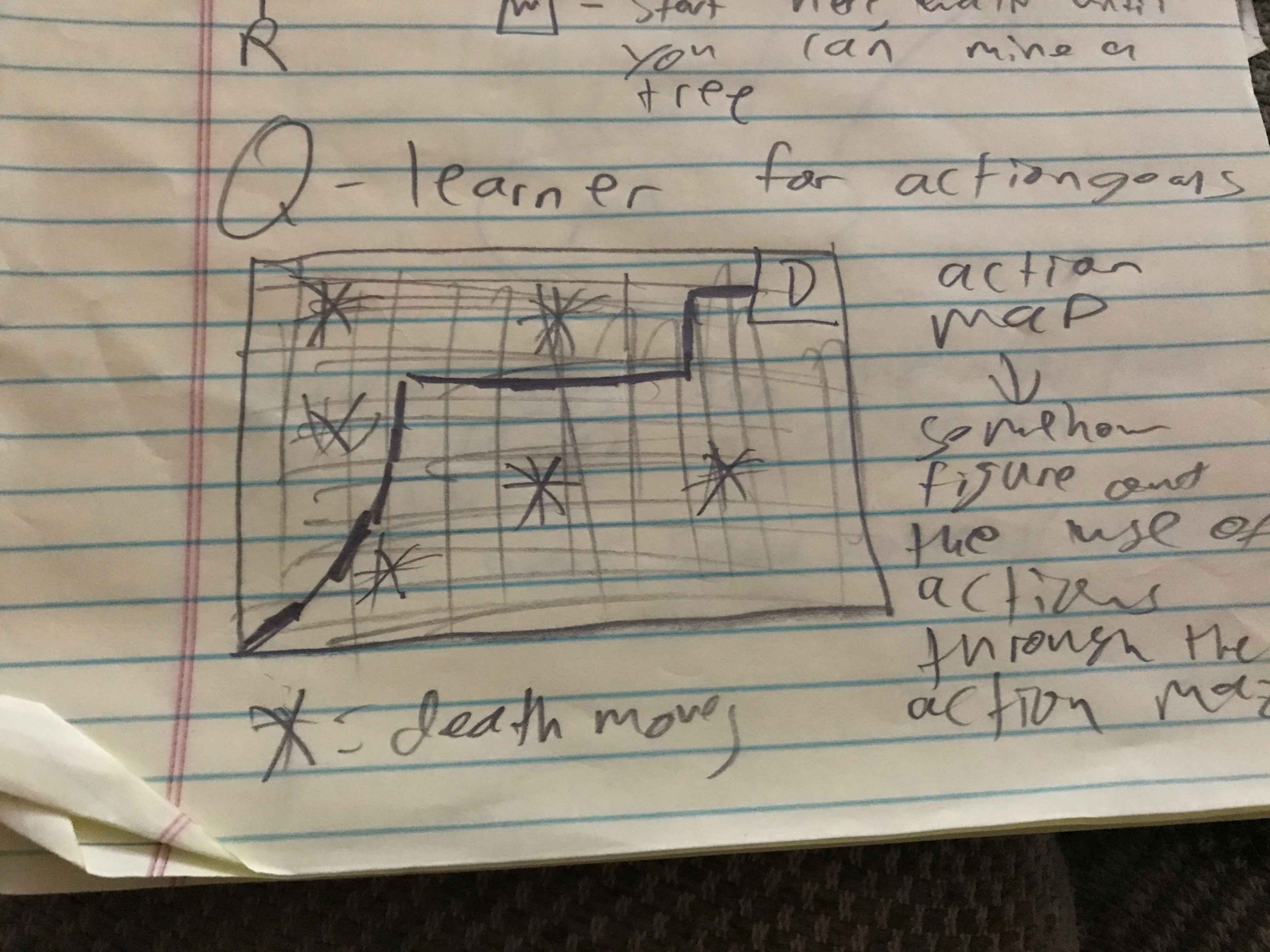

I thought of this problem as a sort of action map that a Q-learning approach could solve. The action map is filled with hazards, and over time the model would learn the correct sequence of actions. This produced a chicken-and-egg problem, however: to learn what order to perform the steps in, the program would need to know how to perform the steps, but to perform the steps, it would have to know which steps to perform.

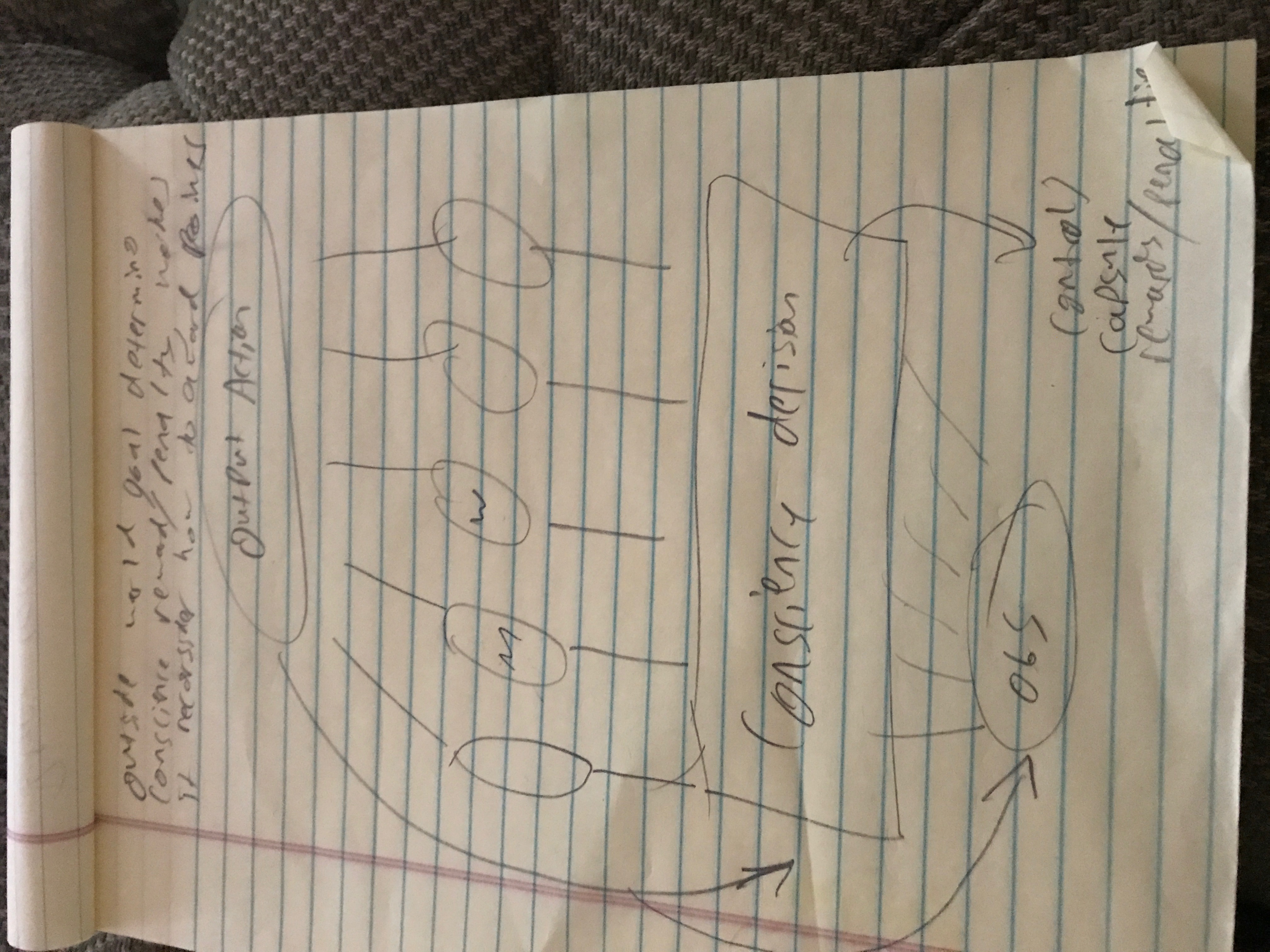
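As a rough illustration of the action-map idea, here is a minimal tabular Q-learning sketch on a toy chain of steps. Everything in it is an assumption made for illustration: the chain has 4 steps rather than 12, each step is treated as a single atomic action, and the reward values are arbitrary. That atomic-action assumption is exactly where the chicken-and-egg problem bites, since in Minecraft the agent does not already know how to perform each step.

```python
import random

N_STEPS = 4                      # hypothetical chain length (the real task has 12)
ACTIONS = list(range(N_STEPS))   # action i means "attempt step i"


def step(state, action):
    """Toy environment. `state` is the number of steps completed so far.

    Returns (next_state, reward, done).
    """
    if action == state:                      # the correct next step
        next_state = state + 1
        done = next_state == N_STEPS
        return next_state, (10.0 if done else 1.0), done
    return state, -1.0, False                # wrong step: penalty, no progress


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Epsilon-greedy tabular Q-learning over the toy chain."""
    rng = random.Random(seed)
    q = [[0.0] * N_STEPS for _ in range(N_STEPS + 1)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):                 # cap on episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            nxt, reward, done = step(state, action)
            # Standard Q-learning target: reward plus discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


def greedy_plan(q):
    """Best action in each non-terminal state according to the learned table."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STEPS)]
```

Calling `greedy_plan(train())` recovers the step order for this toy chain, but only because `step` already encodes how to carry out each step.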

Thinking of the problem as navigating an action map encouraged me to look at it from a different angle. Using an implementation of Geoffrey Hinton's capsule theory and a theory of modular evolution in neural networks, I came up with a pseudo-conscience Minecraft model. The model starts by taking in a world observation state containing all information relevant to the player at that time. That observation is fed through a neural network that determines what the player's next goal should be. The goal is then sent to the capsule layer, where the player looks at the given world_state and chooses the actions necessary to complete the assigned goal.
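The two-stage flow described here (observation to goal, then goal to action) might be sketched as follows. This is a simplified stand-in, not the model itself: the goal and action names are hypothetical, each "network" is a single random linear layer, and the capsules are plain per-goal modules chosen by a dictionary lookup rather than Hinton-style capsules with routing.

```python
import random

GOALS = ["gather_wood", "craft_pickaxe", "mine_ore"]   # hypothetical goal names
ACTIONS = ["move_forward", "attack", "craft"]          # hypothetical action names


def argmax(values):
    """Index of the largest value in a list."""
    return max(range(len(values)), key=lambda i: values[i])


class LinearLayer:
    """One dense layer with random weights; a placeholder for a trained network."""

    def __init__(self, n_in, n_out, rng):
        self.w = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]

    def forward(self, x):
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in self.w]


class Agent:
    """Goal selector ("conscience") followed by one capsule per goal."""

    def __init__(self, obs_size, seed=0):
        rng = random.Random(seed)
        self.goal_selector = LinearLayer(obs_size, len(GOALS), rng)
        self.capsules = {g: LinearLayer(obs_size, len(ACTIONS), rng) for g in GOALS}

    def act(self, world_state):
        # Stage 1: pick the next goal from the observation.
        goal = GOALS[argmax(self.goal_selector.forward(world_state))]
        # Stage 2: the capsule for that goal picks the action.
        action = ACTIONS[argmax(self.capsules[goal].forward(world_state))]
        return goal, action
```

For example, `Agent(obs_size=4).act([1.0, 0.0, 0.5, 0.2])` returns a `(goal, action)` pair. Keeping a separate module per goal means a reward for completing a goal can be credited to only the capsule that acted on it.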

When the player completes the goal, the "conscience" rewards the capsules responsible to encourage better performance. However, if the goal-selection stage of the model picks a bad goal, the model will end up rewarding objectively bad behaviors, so a genetic algorithm is necessary here. To evolve the model, genetic variations in consciences ought to be introduced so that the player makes the best plans and executes them as well as possible.
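A minimal sketch of what evolving the consciences could look like, assuming each conscience is reduced to a short list of weights. The `fitness` function is a stand-in: in the real setting it would be the score a conscience earns by playing episodes, whereas here it is simply the closeness to an arbitrary target vector so that the example can run on its own. The population size, mutation scale, and elitist selection scheme are likewise illustrative choices.

```python
import random

TARGET = [0.5, -0.25, 0.75]   # arbitrary stand-in for "a good conscience"


def fitness(genome):
    """Stand-in fitness: higher is better, 0.0 is a perfect match to TARGET."""
    return -sum((g - t) ** 2 for g, t in zip(genome, TARGET))


def mutate(genome, rng, sigma=0.1):
    """Return a copy of the genome with Gaussian noise added to every weight."""
    return [g + rng.gauss(0.0, sigma) for g in genome]


def evolve(generations=50, pop_size=20, n_elite=5, seed=0):
    """Elitist genetic algorithm: keep the best genomes, refill with mutants."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in TARGET] for _ in range(pop_size)]
    history = []                                  # best fitness per generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        elite = population[:n_elite]              # survivors, kept unchanged
        children = [mutate(rng.choice(elite), rng)
                    for _ in range(pop_size - n_elite)]
        population = elite + children
    population.sort(key=fitness, reverse=True)
    return population[0], history
```

Because the elite genomes are carried over unchanged, the best fitness in `history` never decreases from one generation to the next.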

However, due to the complexity of the model, no successful implementation was reached during development.
